Image compositing means combining multiple images to create a single, seamless image. In this tutorial, we will create a simple composite using the Read and Merge nodes. The nodes in the Merge category are used to composite two or more images. Before we dive into the tutorial, let's first understand how the Merge, Premult, and Unpremult nodes work.

MERGE NODE

The Merge node combines two input images based on their transparency (alpha channel) using various algorithms. The alpha channel determines which pixels of the foreground image will be used in the composite. This node takes three inputs: A, B, and mask. The A input is used to connect the foreground image to the Merge node. This image is merged with the image connected to the B input. When you connect an image to the A input of the Merge node, an additional A input is displayed on the node; refer to Figure 1.

Figure 1 Additional input A displayed on the Merge1 node

Each additional A input is named in the order in which it was connected: A1, A2, A3, and so on. This means that you can connect as many images as you need to the A side of a Merge node. NukeX composites the data from the A input onto the B input. If you disconnect the node connected to the A input, the data stream will still flow downstream because NukeX passes the B input through. The mask input is used to connect a node to use as a mask.

The

Merge node connects multiple images using various algorithms such as

multiply,

overlay,

screen, and so on. To add a

Merge node to the workspace, press the M key; the

Merge# node will be inserted in the

Node Graph panel and its properties panel will be displayed with the

Merge tab chosen in the

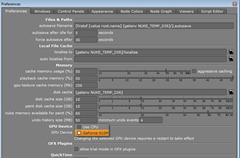

Properties Bin, refer to Figure 2. The options available in the

Merge tab of the

Merge# node properties panel are discussed next.

Figure 2 The Merge# node properties panel

operation

The options in the operation drop-down are used to set the algorithm to be used for merging the images. By default, the over algorithm is selected in this drop-down. It layers the image sequence connected to the A input over the image sequence connected to the B input according to the alpha channel present in the A input.

Tip: To see the math formula for a particular merge algorithm, place the cursor over the operation drop-down; a tooltip will be displayed. This tooltip contains the information about the mathematics behind a merge operation.
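For the default over operation, the formula is commonly written as A+B(1-a), where a is the alpha of the A input. The following Python sketch applies that formula to a single premultiplied RGBA pixel stored as a tuple. The function name `merge_over` and the tuple layout are assumptions made for illustration; this is not NukeX code.

```python
def merge_over(a_px, b_px):
    """Composite premultiplied pixel A over premultiplied pixel B.

    Each pixel is an (r, g, b, alpha) tuple of floats. The 'over'
    formula A + B * (1 - a) is applied to every channel, alpha included.
    """
    a_alpha = a_px[3]
    return tuple(ca + cb * (1.0 - a_alpha) for ca, cb in zip(a_px, b_px))


# A half-transparent red foreground over an opaque blue background.
fg = (0.5, 0.0, 0.0, 0.5)  # premultiplied: red 1.0 scaled by alpha 0.5
bg = (0.0, 0.0, 1.0, 1.0)
print(merge_over(fg, bg))  # (0.5, 0.0, 0.5, 1.0)
```

Where A is fully transparent, B passes through untouched; where A is opaque, B is completely hidden.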

When the Video colorspace check box located next to the operation drop-down is selected, NukeX converts all colors to the default 8-bit colorspace before compositing and then outputs the result in linear colorspace. You can change the default colorspace for 8-bit files in the Project Settings panel. To do so, hover the mouse over the workspace and then press S; the Project Settings panel will be displayed. In this panel, choose the LUT tab; the Default LUT settings area will be displayed. Next, select the desired option from the 8-bit files drop-down; refer to Figure 3.

Figure 3 Partial view of the LUT tab of the Project Settings panel

On selecting the alpha masking check box located next to the Video colorspace check box, the image is processed according to the PDF/SVG spec. According to this spec, an input image remains unchanged wherever the other composited image has zero alpha, and the output alpha is calculated using the formula a+b-a*b, where a and b are the alpha values of the A and B inputs. If this check box is cleared, the same formula that is applied to the other channels is applied to the alpha channel as well.

Note: This check box is disabled when it has no effect on the operation selected in the operation drop-down, or when the PDF/SVG spec does not apply to that operation.
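The alpha part of this behavior can be sketched in Python as follows. The sketch only models the output alpha; the function names are hypothetical, and multiply (A*B) is used purely as an example of an operation whose own formula would otherwise be applied to the alpha channel.

```python
def merge_alpha(a, b, op_alpha, alpha_masking=True):
    """Return the output alpha of a merge.

    a, b: alpha values of the A and B inputs (0.0 to 1.0).
    op_alpha: the operation's own formula, used for alpha when alpha
    masking is off (an illustrative callable, not a NukeX API).
    """
    if alpha_masking:
        # PDF/SVG-style alpha: a + b - a*b.
        return a + b - a * b
    # Otherwise alpha is treated like any other channel.
    return op_alpha(a, b)


def multiply(x, y):
    """Example operation: A * B."""
    return x * y


print(merge_alpha(0.5, 0.5, multiply, alpha_masking=True))   # 0.75
print(merge_alpha(0.5, 0.5, multiply, alpha_masking=False))  # 0.25
print(merge_alpha(0.0, 0.8, multiply, alpha_masking=True))   # 0.8
```

The last line shows the spec's rule in action: where A has zero alpha, the output alpha is simply B's alpha.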

set bbox to

The options in this drop-down are used to set the bounding box of the output. The bounding box defines the area of the frame that contains valid image data; it is used to speed up processing. By default, the bounding box of an input image is the full image area, but if you crop an input, its bounding box is reduced to the cropped area. The default option in this drop-down is union; the other three are intersection, A, and B. These options are discussed next.

union

The union option combines the bounding boxes of the A and B inputs. It resizes the output bounding box so that it completely contains both input bounding boxes.

intersection

When you select the intersection option, the output bounding box will be the overlapping area of the two input bounding boxes.

A

Select the A option to use the bounding box from the A input.

B

Select the B option to use the bounding box from the B input.
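The four options can be sketched in Python using boxes described by their left, bottom, right, and top edges (the same x, y, r, t convention the Crop node uses later in this tutorial). The `BBox` type and `merged_bbox` function are hypothetical helpers, and returning None for non-overlapping boxes is a choice made for this sketch only.

```python
from typing import NamedTuple, Optional


class BBox(NamedTuple):
    """Axis-aligned box in pixels: left x, bottom y, right r, top t."""
    x: int
    y: int
    r: int
    t: int


def merged_bbox(a: BBox, b: BBox, mode: str = "union") -> Optional[BBox]:
    if mode == "union":
        # Smallest box that fully contains both input boxes.
        return BBox(min(a.x, b.x), min(a.y, b.y), max(a.r, b.r), max(a.t, b.t))
    if mode == "intersection":
        # Overlapping area only; None when the boxes do not overlap.
        box = BBox(max(a.x, b.x), max(a.y, b.y), min(a.r, b.r), min(a.t, b.t))
        return box if box.x < box.r and box.y < box.t else None
    if mode == "A":
        return a
    if mode == "B":
        return b
    raise ValueError(f"unknown mode: {mode}")


a_box = BBox(100, 100, 400, 300)  # e.g. a cropped A input
b_box = BBox(0, 0, 720, 486)      # full-frame B input
print(merged_bbox(a_box, b_box, "union"))         # BBox(x=0, y=0, r=720, t=486)
print(merged_bbox(a_box, b_box, "intersection"))  # BBox(x=100, y=100, r=400, t=300)
```

A smaller output box means fewer pixels to process downstream, which is why the choice affects speed.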

metadata from

The options in this drop-down are used to specify the node whose metadata will flow down the process tree.

A channels

The options in the first A channels drop-down are used to specify which channels from the A input will be merged with the B input. The options in the second A channels drop-down are used to specify an additional channel (alpha) to be merged with the B input. If you select none from the first A channels drop-down, the A input will be treated as black (zero). You can select the check boxes on the right of the first A channels drop-down to select individual channels.

B channels

The options in the first B channels drop-down are used to specify which channels from the B input will be merged with the A input. The options in the second B channels drop-down are used to specify an additional channel (alpha) to be merged with the A input. You can select the check boxes on the right of the first B channels drop-down to select individual channels.

output

The options in the first output drop-down are used to specify the output channels after the merge operation. The options in the second output drop-down are used to specify an additional output channel (alpha) after the merge operation. You can select the check boxes on the right of the first output drop-down to select individual channels.

Note: There are four check boxes on the right of the A channels, B channels, and output drop-downs, namely red, green, blue, and Enable channel, refer to Figure 4. You can use these check boxes to keep or remove the channels from the merge calculations, as required. When the Enable channel check box is selected, the channels selected from the drop-down placed on the right of this check box are enabled. This check box is available in the properties panels of many nodes.

Figure 4 The Enable channel check boxes displayed in the Merge1 properties panel

also merge

The options in the first also merge drop-down are used to specify the channels that will be merged in addition to the channels specified in the A channels and B channels drop-downs. The options in the second also merge drop-down are used to specify an additional channel (alpha) to be merged. You can select the check boxes on the right of the first also merge drop-down to select individual channels. These check boxes appear when you select an option other than none in the first also merge drop-down.

mask

The Enable channel check box located on the left of the mask drop-down is selected when you connect a mask to the mask input of the Merge node or select a channel from the mask drop-down. The options in this drop-down are used to select the channel that will be used as the mask. When the inject check box is selected, NukeX copies the mask input to the predefined mask.a channel so that the injected mask can be used further downstream in the process tree. By default, the merge is limited to the non-black areas of the mask. When you select the invert check box, the mask channel is inverted, so the merge is limited to the non-white areas of the mask. The fringe check box is used to blur the edges of the mask.

mix

The mix parameter is used to blend the merged result with the B input. When the value of this parameter is set to 0, only the B input is displayed in the Viewer# panel. The full merge is displayed when the value is set to 1, which is the default.
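The mask and mix controls can both be pictured as a per-channel blend between the B input and the merge result. The following Python sketch models them that way, assuming a simple linear blend in which the mask value (optionally inverted) and the mix value are multiplied together. `finish_merge` is a hypothetical name, and the fringe check box is not modeled.

```python
def finish_merge(merged, b, mask=1.0, invert=False, mix=1.0):
    """Blend a merge result back toward the B input (one channel).

    merged: value produced by the merge operation.
    b: the B input value for the same channel.
    mask: mask value, 0.0 (black) to 1.0 (white).
    invert: mimic the invert check box by using 1 - mask.
    mix: 0.0 shows only B, 1.0 shows the full merge.
    """
    m = 1.0 - mask if invert else mask
    weight = m * mix  # assumption: mask and mix simply multiply
    return b + (merged - b) * weight


print(finish_merge(0.75, 0.25))                          # 0.75, full merge
print(finish_merge(0.75, 0.25, mix=0.0))                 # 0.25, only B
print(finish_merge(0.75, 0.25, mask=0.0))                # 0.25, black mask area
print(finish_merge(0.75, 0.25, mask=0.0, invert=True))   # 0.75, inverted mask
print(finish_merge(0.75, 0.25, mix=0.5))                 # 0.5, halfway blend
```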

PREMULT NODE

The Premult node is used to premultiply the input image: it multiplies the rgb channels of the input image by its alpha channel. The alpha channel determines which pixels of the foreground input image will be visible in the final composite. An image that is not premultiplied is referred to as straight or unpremultiplied. If the areas that are black in the alpha channel are not black in the color channels, the image is straight. Most 3D rendered images are premultiplied. The Merge node expects premultiplied images, so you should use the Premult node before any merge operation if the input image is not premultiplied. This helps avoid artifacts such as fringes around a masked object. When color-correcting a premultiplied image, you should first connect an Unpremult node to the image and then perform the color correction. Next, connect a Premult node to return the image to its original premultiplied state for the merge operations.

To add a Premult node to the Node Graph panel, select Premult from the Merge menu of the Nodes toolbar; the Premult# node will be inserted in the Node Graph panel and its properties panel will be displayed with the Premult tab chosen in the Properties Bin; refer to Figure 5. The options available in the Premult tab of the Premult# node properties panel are discussed next.

Figure 5 The Premult1 node properties panel

multiply

The options in the first multiply drop-down are used to set the channels (generally rgb) to be multiplied by the alpha channel. To select individual channels, you can select the check boxes available on the right of the multiply drop-down. The options in the second multiply drop-down are used to set an additional channel to be multiplied by the alpha channel.

by

If you select the Enable channel check box located on the left of the by drop-down, the channel set in it (generally alpha) is multiplied with the channels set in the multiply drop-downs. The invert check box is used to invert the alpha channel.
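The multiply-by-alpha operation described in this section can be sketched in Python for a single RGBA pixel. The sketch assumes the default setup (rgb multiplied by alpha) and does not model the invert check box; `premultiply` is a hypothetical helper name.

```python
def premultiply(px):
    """Multiply the rgb channels of an (r, g, b, a) pixel by its alpha."""
    r, g, b, a = px
    return (r * a, g * a, b * a, a)


# Hypothetical straight (unpremultiplied) pixels.
half_covered = (1.0, 0.5, 0.25, 0.5)
outside_matte = (0.8, 0.8, 0.8, 0.0)  # color where alpha is black: a straight image

print(premultiply(half_covered))   # (0.5, 0.25, 0.125, 0.5)
print(premultiply(outside_matte))  # (0.0, 0.0, 0.0, 0.0)
```

After premultiplication, every area that is black in the alpha is also black in the color channels, which is what the Merge node expects.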

UNPREMULT NODE

The Unpremult node is used to divide the rgb channels of the input image by its alpha. To add an Unpremult node to the Node Graph panel, select Unpremult from the Merge menu; the Unpremult# node will be inserted in the Node Graph panel and its properties panel will be displayed with the Unpremult tab chosen in the Properties Bin; refer to Figure 6. The options available in the Unpremult tab of the Unpremult# node properties panel are discussed next.

Figure 6 The Unpremult1 node properties panel

divide

The options in the first divide drop-down are used to set the channels (generally rgb) to be divided by the alpha channel. To select individual channels, you can select the check boxes available on the right of the divide drop-down. The options in the second divide drop-down are used to set an additional channel to be divided by the alpha channel. The by and invert check boxes work the same way as described for the Premult node.
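The Unpremult, color-correct, Premult workflow recommended in the Premult section can be illustrated with a small Python sketch. Here `add_offset` is a hypothetical stand-in for a color correction that is not a pure multiply (a pure gain would give the same result either way), and leaving zero-alpha pixels unchanged in `unpremultiply` is an assumption of this sketch.

```python
def unpremultiply(px):
    """Divide the rgb channels of an (r, g, b, a) pixel by its alpha.

    Pixels with zero alpha are returned unchanged to avoid a division by
    zero; this guard is an assumption of the sketch.
    """
    r, g, b, a = px
    if a == 0.0:
        return px
    return (r / a, g / a, b / a, a)


def premultiply(px):
    """Multiply the rgb channels of an (r, g, b, a) pixel by its alpha."""
    r, g, b, a = px
    return (r * a, g * a, b * a, a)


def add_offset(px, k):
    """Stand-in color correction: add k to rgb and leave alpha alone."""
    r, g, b, a = px
    return (r + k, g + k, b + k, a)


edge = (0.25, 0.25, 0.25, 0.5)  # premultiplied edge pixel (straight color 0.5)

# Correcting the premultiplied pixel directly: straight value becomes 1.0, not 0.75.
print(add_offset(edge, 0.25))                              # (0.5, 0.5, 0.5, 0.5)
# Unpremult -> correct -> Premult keeps the edge consistent with opaque areas.
print(premultiply(add_offset(unpremultiply(edge), 0.25)))  # (0.375, 0.375, 0.375, 0.5)
```

The over-bright result in the first case is the kind of edge fringe the Unpremult/Premult pair is meant to prevent.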

TUTORIAL

In this tutorial, we will create a simple composite using the sunset.jpg, tree.png, and man standing.png files. Figures 7, 8, 9, and 10 display the sunset.jpg, tree.png, and man standing.png images and the final output, respectively.

Figure 7 The sunset.jpg image

Figure 8 The tree.png image

Figure 9 The man standing.png image

Figure 10 The final composite

Step - 1

In your browser, navigate to http://www.sxc.hu/photo/1252649; an image will be displayed. Next, download the image and save it to your hard drive with the name sunset.jpg.

Step - 2

Navigate to http://www.mediafire.com/download/ob9on43alamlk0l/nt004.zip and download the zip file that contains the png files. Next, extract the contents of the zip file to the location where you saved sunset.jpg.

Step - 3

Start Nuke and then create a new script by choosing File > New from the menu bar.

Step - 4

Hover the cursor over the Node Graph panel and press S; the Project Settings panel is displayed in the Properties Bin. Make sure the Root tab is chosen in it and then select NTSC_16:9 720x486 1.21 from the full size format drop-down.
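The three numbers in the format name are the width, height, and pixel aspect ratio. A quick Python check of what they imply for the displayed frame, taking the values directly from the format name:

```python
width, height, pixel_aspect = 720, 486, 1.21  # NTSC_16:9 720x486 1.21

# Width in square pixels once the non-square pixels are stretched for display.
display_width = width * pixel_aspect
print(round(display_width, 1), height)   # 871.2 486
print(round(display_width / height, 3))  # 1.793, close to 16:9 (about 1.778)
```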

Next, you will import images to the script.

Step - 5

Choose the Image button from the Nodes toolbar; the Image menu will be displayed. Next, choose Read from this menu; the Read File(s) dialog box will be displayed. In this dialog box, navigate to the location where you saved sunset.jpg and then choose the Open button; the Read1 node will be inserted in the Node Graph panel.

Step - 6

Make sure the Read1 node is selected in the Node Graph panel and then press 1 to view the output of the Read1 node in the Viewer1 panel.

Step - 7

Choose the Transform button from the Nodes toolbar; the Transform menu is displayed. Next, choose Reformat from this menu; the Reformat1 node will be added to the Node Graph panel and a connection will be established between the Read1 and Reformat1 nodes.

Step - 8

Import the tree.png and man standing.png files into the script as described in Step 5; the Read2 and Read3 nodes will be added to the Node Graph panel.

Step - 9

Make sure the Read2 node is selected in the Node Graph panel and then press 1; the output of the Read2 node is displayed in the Viewer1 panel. In the Read tab of the Read2 properties panel, select the premultiplied check box.

Step - 10

Make sure the Read3 node is selected in the Node Graph panel and then press 1; the output of the Read3 node is displayed in the Viewer1 panel. In the Read tab of the Read3 properties panel, select the premultiplied check box.

Step - 11

Select the Reformat1 node in the Node Graph panel and then press 1 to view the output of the Reformat1 node in the Viewer1 panel.

Step - 12

Select the Read2 node in the Node Graph panel and then press M; the Merge1 node will be inserted in the Node Graph panel and a connection will be established between the Read2 and Merge1 nodes.

Step - 13

Drag the Merge1 node onto the pipe connecting the Reformat1 and Viewer1 nodes; the output of the Merge1 node will be displayed in the Viewer1 panel.

Step - 14

Insert a Reformat node (Reformat2) between the Read2 and Merge1 nodes.

Step - 15

Select the Reformat2 node in the Node Graph panel and then press C; the ColorCorrect1 node is inserted between the Reformat2 and Merge1 nodes.

Step - 16

In the ColorCorrect tab of the ColorCorrect1 node properties panel, enter 0 in the gain field.
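Setting gain to 0 multiplies the color channels by zero. Assuming the ColorCorrect node is left processing only the rgb channels, the tree keeps its alpha and becomes a black silhouette over the sunset. The Python sketch below shows this on a few hypothetical pixel values; the helper names are illustrative, and the over formula A+B(1-a) is used for the merge.

```python
def color_correct_gain(px, gain):
    """Multiply the rgb channels by gain; alpha passes through untouched."""
    r, g, b, a = px
    return (r * gain, g * gain, b * gain, a)


def merge_over(a_px, b_px):
    """Premultiplied 'over': A + B * (1 - a) on every channel."""
    return tuple(ca + cb * (1.0 - a_px[3]) for ca, cb in zip(a_px, b_px))


leaf = (0.1, 0.4, 0.1, 1.0)    # hypothetical opaque tree pixel
edge = (0.05, 0.2, 0.05, 0.5)  # hypothetical half-covered edge pixel
sky = (0.9, 0.5, 0.25, 1.0)    # hypothetical sunset pixel

print(merge_over(color_correct_gain(leaf, 0), sky))  # (0.0, 0.0, 0.0, 1.0)
print(merge_over(color_correct_gain(edge, 0), sky))  # (0.45, 0.25, 0.125, 1.0)
```

Opaque parts of the tree turn black, while semi-transparent edges darken the sky only in proportion to their alpha.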

Step - 17

Select the Read3 node in the Node Graph panel and SHIFT-select the Merge1 node. Next, press M; the Merge2 node will be inserted in the Node Graph panel and a connection will be established between the Merge1, Merge2, and Read3 nodes.

Next, you need to crop and scale down the output of the Read3 node.

Step - 18

Select the Read3 node in the Node Graph panel and then choose Crop from the Transform menu; the Crop1 node will be inserted between the Read3 and Merge2 nodes.

Step - 19

In the Crop tab of the Crop1 node properties panel, enter 120, 335, 955, and 1780 in the box x, y, r, and t fields, respectively.

Next, you will scale down and position the output of the Crop1 node.

Step - 20

Select the Crop1 node in the Node Graph panel and then choose Transform from the Transform menu; the Transform1 node will be inserted between the Crop1 and Merge2 nodes.

Step - 21

In the Transform tab of the Transform1 node properties panel, enter -853 and -845.4 in the translate x and y fields, respectively.

Step - 22

Enter 0.066 in the scale field.
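The crop values and the scale factor determine roughly how large the man appears in the 720x486 frame. A quick Python check of the arithmetic, assuming the scale applies uniformly to the cropped region (the translate values depend on the transform center and are not modeled here):

```python
# Crop box from Step 19: x, y, r, t (left, bottom, right, top) in pixels.
x, y, r, t = 120, 335, 955, 1780
crop_w, crop_h = r - x, t - y
print(crop_w, crop_h)  # 835 1445

# Uniform scale from Step 22.
scale = 0.066
print(round(crop_w * scale, 2), round(crop_h * scale, 2))  # 55.11 95.37
```

At roughly 95 pixels tall, the figure stays small relative to the tree and sunset, which suggests distance.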

Next, you will apply an overall color-correction.

Step - 23

Select the Merge2 node in the Node Graph panel and then press C; the ColorCorrect2 node is inserted in the Node Graph panel and a connection is established between the Merge2 and ColorCorrect2 nodes.

Step - 24

In the ColorCorrect tab of the ColorCorrect2 node properties panel, expand the master area if it is not already expanded. Next, choose the Channel chooser button corresponding to the gamma parameter and then enter 0.7614 and 0.48 in the g and b fields, respectively.
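Assuming the ColorCorrect gamma follows the common convention out = in^(1/gamma) (an assumption, not confirmed by this tutorial), gamma values below 1 darken a channel, so lowering only green and blue pushes the image toward warmer red and orange tones. The Python sketch below applies the Step 24 values to a hypothetical mid-grey pixel; `apply_gamma` is a hypothetical helper, not a NukeX function.

```python
def apply_gamma(value, gamma):
    """Assumed gamma convention: out = in ** (1 / gamma), for in >= 0."""
    return value ** (1.0 / gamma)


# Gamma values from Step 24; red is left at the default of 1.
gammas = {"r": 1.0, "g": 0.7614, "b": 0.48}
mid_grey = 0.5

corrected = {ch: apply_gamma(mid_grey, gm) for ch, gm in gammas.items()}
# Red stays at 0.5 while green and blue are pulled down, blue the most.
print({ch: round(v, 3) for ch, v in corrected.items()})
```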

Step - 25

Save the composition. Figure 11 shows the node network used in the script.

Figure 11 The node network used in the script